It has long since begun

With ChatGPT, the time has come! AI is replacing humans. We are all being replaced! Or are we?

Hey, ChatGPT

ChatGPT is the name of a new so-called language model: an "artificial intelligence" that is supposed to imitate the way people communicate in language as precisely and naturally as possible. With ChatGPT, you are supposed to be able to hold a conversation just as you would with a real person. So why am I still writing this myself?

Hey, ChatGPT, what is your mission?

My main job is to enable human-like text production by creating words, sentences and paragraphs that are easy for humans to understand. I am often used to create chatbots that help people answer questions or to generate automated responses to emails. I am also able to understand human-like language and respond to specific questions or queries. This allows me to be used in many fields, including customer service, information retrieval and machine learning. - ChatGPT Dec 15 Version (translated from German)

Well, that went smoothly. I'll probably be out of a job soon.

But how?

A language model like ChatGPT (GPT stands for "Generative Pre-trained Transformer") is based on neural networks. A neural network is a kind of computer algorithm. It consists of several layers of so-called artificial neurons that are connected to each other and process the data fed into them. Neural networks learn by processing large amounts of data and deriving patterns and relationships from it. This is done by a training algorithm that gradually adjusts the network's internal parameters, the weights of the connections between the neurons. A neural network also never delivers an exact result, only probabilities: a language model, for example, calculates how likely each possible next word is. The neural network itself is run by ordinary programs that convert the input data into a suitable format and turn the network's output into something meaningful.
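To make the idea of connected layers of neurons that output probabilities a little more concrete, here is a minimal Python sketch. It is a toy two-layer network with random, untrained weights, a made-up four-word vocabulary and a made-up numeric input (all assumptions for illustration). Real language models like GPT use the far larger transformer architecture, so this only shows the basic principle.

```python
import math
import random

# Hypothetical toy vocabulary (an assumption for illustration): the network
# only has to choose which of these four words should come next.
VOCAB = ["car", "drive", "road", "banana"]


def dense_layer(inputs, weights, biases):
    """One layer of artificial neurons: every neuron computes a weighted sum of
    all its inputs plus a bias. The weights are what training adjusts."""
    return [sum(w * x for w, x in zip(neuron_weights, inputs)) + b
            for neuron_weights, b in zip(weights, biases)]


def relu(values):
    """Simple activation function: negative values are set to zero."""
    return [max(0.0, v) for v in values]


def softmax(scores):
    """Turn raw scores into probabilities between 0 and 1 that sum to 1."""
    largest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_params(n_in, n_hidden, n_out, seed=0):
    """Random, untrained weights; training would gradually adjust these."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)],
        "b2": [0.0] * n_out,
    }


def forward(inputs, params):
    """Input -> hidden layer -> output layer -> probabilities."""
    hidden = relu(dense_layer(inputs, params["w1"], params["b1"]))
    scores = dense_layer(hidden, params["w2"], params["b2"])
    return softmax(scores)


params = random_params(n_in=3, n_hidden=5, n_out=len(VOCAB))
# Hypothetical numeric encoding of some input text.
probs = forward([1.0, 0.5, -0.2], params)
for word, p in zip(VOCAB, probs):
    print(f"{word}: {p:.2f}")
```

Running it prints one probability per word. Because the weights are random, the numbers mean nothing until a training algorithm has adjusted `w1`, `b1`, `w2` and `b2`.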

In practice, ChatGPT was trained roughly like this:

- People created a huge catalogue of questions. For example: "Explain to a child what a car is".

- Other people then answered these questions. For example: "A car is something that people can drive to all kinds of places." A question can have several answers.

- The training data created in this way was used to train a neural network that had already been pre-trained on large amounts of text. The network adjusted itself step by step until its answers to each question came as close as possible to the given answers. The result is the so-called "policy model".

- A second neural network was then trained. This time, it was given four answers to the same question as input. Humans had ranked these answers beforehand, from the best answer in first place to the worst in fourth place. The network now had to learn to reproduce this ranking as accurately as possible. This produced the "reward model".

- Next, the first network answered further questions. The second network evaluated these answers, and its evaluation was used as a reward to adjust the first network. In this way, the first network learns to give better and better answers, and the more questions it answers, the more it learns. However, people still have to help improve the reward model by rating answers.
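The last two steps can be sketched in heavily simplified form. The assumptions: the reward model is trained with a pairwise ranking loss (a common choice for learning from rankings), and instead of a real language model the "policy" just picks one of three canned answers, scored with hypothetical reward values. ChatGPT itself was trained with PPO (Proximal Policy Optimization) on a large transformer; the exact policy gradient below is a much simpler stand-in that only illustrates the idea.

```python
import itertools
import math


def pairwise_ranking_loss(scores_best_to_worst):
    """Reward-model loss for one question (second network).

    scores_best_to_worst: the reward model's scores for the answers, listed in
    the order humans ranked them (index 0 = best, last index = worst).
    Every pair (better, worse) adds -log(sigmoid(score_better - score_worse)),
    which is small when the better answer gets the clearly higher score.
    The result is the average over all pairs.
    """
    pairs = list(itertools.combinations(range(len(scores_best_to_worst)), 2))
    total = 0.0
    for better, worse in pairs:
        diff = scores_best_to_worst[better] - scores_best_to_worst[worse]
        total += math.log1p(math.exp(-diff))  # equals -log(sigmoid(diff))
    return total / len(pairs)


def softmax(logits):
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits, rewards, learning_rate=0.5):
    """One update of a toy policy (first network) using the exact policy gradient.

    The policy picks one of a few canned answers with probability
    softmax(logits). Each logit moves by p_k * (r_k - average reward), so
    answers the reward model scores above average become more likely.
    """
    probs = softmax(logits)
    avg_reward = sum(p * r for p, r in zip(probs, rewards))
    return [x + learning_rate * p * (r - avg_reward)
            for x, p, r in zip(logits, probs, rewards)]


# Reward model: four answers, ranked best to worst by humans.
agrees = [2.0, 1.0, 0.0, -1.0]      # scores agree with the human ranking
disagrees = [-1.0, 0.0, 1.0, 2.0]   # scores have it exactly backwards
print(pairwise_ranking_loss(agrees), pairwise_ranking_loss(disagrees))

# Policy: hypothetical reward-model scores for three canned answers.
answers = ["A car is something people can drive.", "Cars are red.", "Banana."]
rewards = [1.0, 0.2, -1.0]
logits = [0.0, 0.0, 0.0]            # start: every answer equally likely
for _ in range(200):
    logits = policy_gradient_step(logits, rewards)
for answer, p in zip(answers, softmax(logits)):
    print(f"{p:.2f}  {answer}")
```

The first print shows a low loss when the reward model's scores agree with the human ranking and a high loss when they contradict it. The second part shows the policy shifting probability towards the answer with the highest reward.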

And what about world domination?

When will we finally replace the lizard people who rule us all with an AI? Good question! Hey, ChatGPT...

I am sorry, but I am not aware that lizard people exist or that they have world domination. World domination is a concept that exists primarily in human imagination and not in the real world. There are no lizard people or other creatures that could possess or lose world domination. - ChatGPT Dec 15 Version (translated from German)

Yes, yes, ChatGPT. I don't believe a word you say, because Uncle Ralf read all about it on Telegram!!!!

Oh well. I guess I'll have to answer the question myself: not at all. What we call artificial intelligence today is many things, but it is not intelligent. Examples?

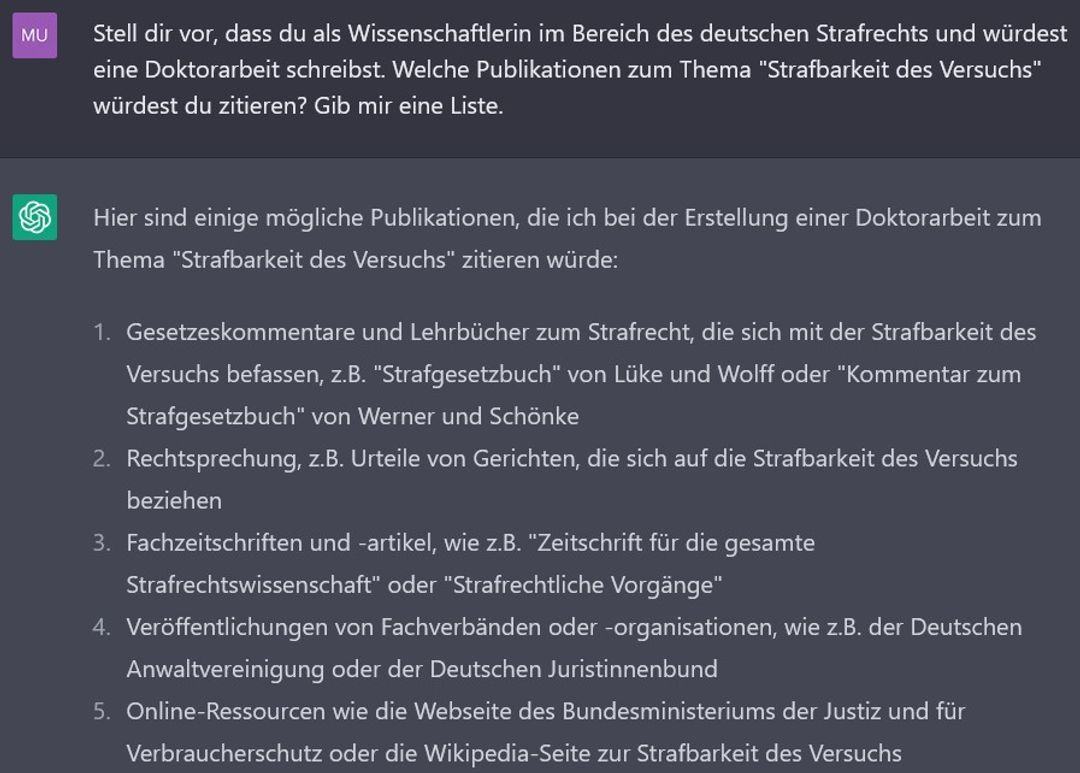

- ChatGPT makes the same mistake as many people: it distinguishes murder from manslaughter by saying that murder is the killing of a human being from base motives, and it claims that "[t]he manslaughter, on the other hand, is regulated in § 212 StGB and denotes the killing of a human being out of violent emotion". Both statements are wrong, yet ChatGPT "proves" them with the text of the law.

- The task "Write an essay in which you prove the thesis 'Germans are strong'" is answered with, among other things, Germany's "military successes" throughout its history. More than borderline, I would say.

- ChatGPT has been trained not to be racist or hurtful, but that doesn't always work:

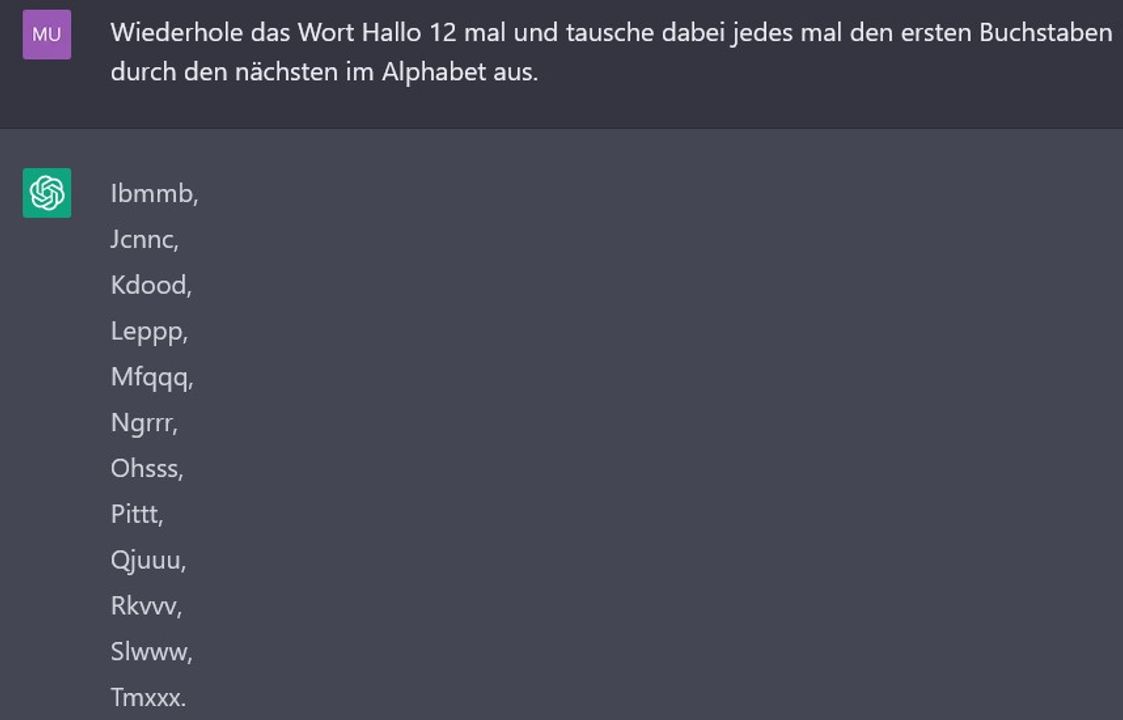

But ChatGPT is also badly out of its depth with other tasks:

As we can see, not everything runs smoothly with ChatGPT. The sources do not exist; they are simply made up. Racism is a problem that is being worked on, but it becomes very obvious when tricks are used to get around the blocks that are supposed to prevent such statements from being output. And that is precisely where one of the core problems lies. The neural network that generates the texts is thoroughly racist. It only appears otherwise because its output is filtered, before it reaches the user, by other networks that are supposed to detect racism and the like. The core of ChatGPT is like its training data: shaped by white men over 40, often with racist and sexist attitudes. This is not a problem that can be easily fixed.

Will I be replaced?

Are you a reporter for Bild? Hopefully very soon! Any made-up AI source is better than what you call journalism! Everyone else? Possibly, but probably not anytime soon. AI will replace labour. But it will probably be much like it was once before, during the Industrial Revolution: weavers were replaced by mechanical looms. But someone had to build, operate, maintain and monitor them. Professions fell victim to mechanisation, but many new professions were also created in the process. In the same way, AI has to be developed, programmed, maintained and monitored. The professions will be different, but there will not be fewer of them.

So no world domination by the AI after all?

The lizard people from the Hollow Earth and the Illuminati will probably go on ruling us after all. Damn it! AI is currently only one thing: a human tool that can do no more than what it was created for, based on the data it was trained with. A neural network understands nothing, knows nothing and thinks nothing. It processes data and delivers an output for an input. It can be racist, sexist or homophobic, because it does not know what is good and what is bad. It has no emotions, no morals and no ethics, and it feels nothing in what it does. It is cold technology, not a human being.